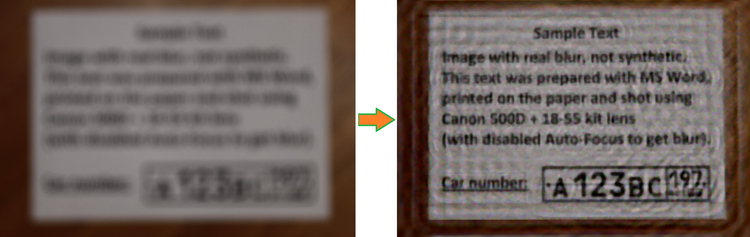

Whenever I see such amazing examples, I am struck by scepticism:

1. Is the image degraded by a real, relevant blur (such as an out-of-focus lens or camera movement), or by a synthetic degradation that satisfies some ideal property (such as being space-invariant, noise-free etc.)?

2. How much manual work is needed to estimate/guide an algorithm in finding the optimal PSF estimate?

3. Even though the ability to "zoom and enhance" until readability is amazingly improved, the subjective qualities of the result leave a lot to be desired: it definitely looks "processed".

In my own photos, I may want some degree of blur in out-of-focus parts of the scene (googled example: http://net.onextrapixel.com/wp-content/ ... assdew.jpg), and for stuff that is moving fast (googled example: http://api.ning.com/files/UKR6RTdFcZk1r ... tos16.jpeg ). It is a part of my "artistic repertoire", if one may use fancy terms. I may also operate at the limit where image noise is barely tolerable. So the question is whether fancy deconvolution-based methods can be used, automatically or semi-automatically, to make my images "better", both in the objective sense of "less error compared to what the image file would be like if I had used a 20kg stand and $10000 lab-grade lenses and had nailed focus" and in the subjective sense of "looks more real/prettier to me".

Seems to me that one would ideally have a complete description of the camera PSF at all apertures, focal lengths, distances, wavelengths etc. _and_ some non-linear, adaptive thingy that compensates for the deviations from the general behaviour one might encounter in any given image, then recover the assumed image information weighted against artifacts/noise amplification in some mean-squared-error (or more elaborate HVS, i.e. human visual system) sense. Then you need to be able to blend the recovered image with the unprocessed image so as to keep artistic intention/satisfy individual taste for enhancement vs artifacts.
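
To make the "recover, weighted against noise amplification, then blend" idea a bit more concrete, here is a minimal sketch in Python/NumPy. It is not any particular product's method: it swaps in a plain Wiener filter (the textbook mean-squared-error trade-off) for the adaptive part, and it assumes a known, space-invariant Gaussian PSF and a constant noise-to-signal ratio, which are exactly the ideal conditions questioned above. The names `gaussian_psf`, `wiener_deconvolve`, `blend`, `nsr` and `amount` are made up for illustration.

```python
import numpy as np


def gaussian_psf(shape, sigma):
    """Centred, normalised Gaussian PSF of the same size as the image.

    Assumption: a Gaussian is only a stand-in for a real lens PSF.
    """
    rows, cols = shape
    y = np.arange(rows) - rows // 2
    x = np.arange(cols) - cols // 2
    yy, xx = np.meshgrid(y, x, indexing="ij")
    psf = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return psf / psf.sum()


def wiener_deconvolve(blurred, psf, nsr):
    """Frequency-domain Wiener filter.

    nsr is an assumed constant noise-to-signal power ratio; larger values
    recover less detail but amplify less noise.
    """
    H = np.fft.fft2(np.fft.ifftshift(psf))      # transfer function of the blur
    G = np.fft.fft2(blurred)
    W = np.conj(H) / (np.abs(H) ** 2 + nsr)     # regularised inverse of H
    return np.real(np.fft.ifft2(W * G))


def blend(original, restored, amount):
    """Linear mix: amount=0 keeps the unprocessed image, amount=1 the restored one."""
    return (1.0 - amount) * original + amount * restored


def mse(a, b):
    return float(np.mean((a - b) ** 2))


# Synthetic test scene: a bright square on a dark background.
rng = np.random.default_rng(0)
sharp = np.zeros((64, 64))
sharp[24:40, 24:40] = 1.0

# Synthetic degradation: circular (FFT) convolution with the PSF plus mild noise.
psf = gaussian_psf(sharp.shape, sigma=2.0)
H = np.fft.fft2(np.fft.ifftshift(psf))
blurred = np.real(np.fft.ifft2(np.fft.fft2(sharp) * H))
noisy = blurred + rng.normal(0.0, 0.01, sharp.shape)

restored = wiener_deconvolve(noisy, psf, nsr=1e-2)
result = blend(noisy, restored, amount=0.7)

print("MSE degraded:", mse(noisy, sharp))
print("MSE restored:", mse(restored, sharp))
print("MSE blended: ", mse(result, sharp))
```

Here `nsr` is the knob for "recovered detail vs noise amplification" and `amount` is the knob for taste. Note that this is the synthetic, space-invariant case; a real photo with depth-dependent defocus or motion blur would need a PSF that varies across the frame, which this sketch does not attempt.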

Perhaps it is easier (but less academically interesting) to buy the more expensive camera gear and improve one's skill in using it.

-h